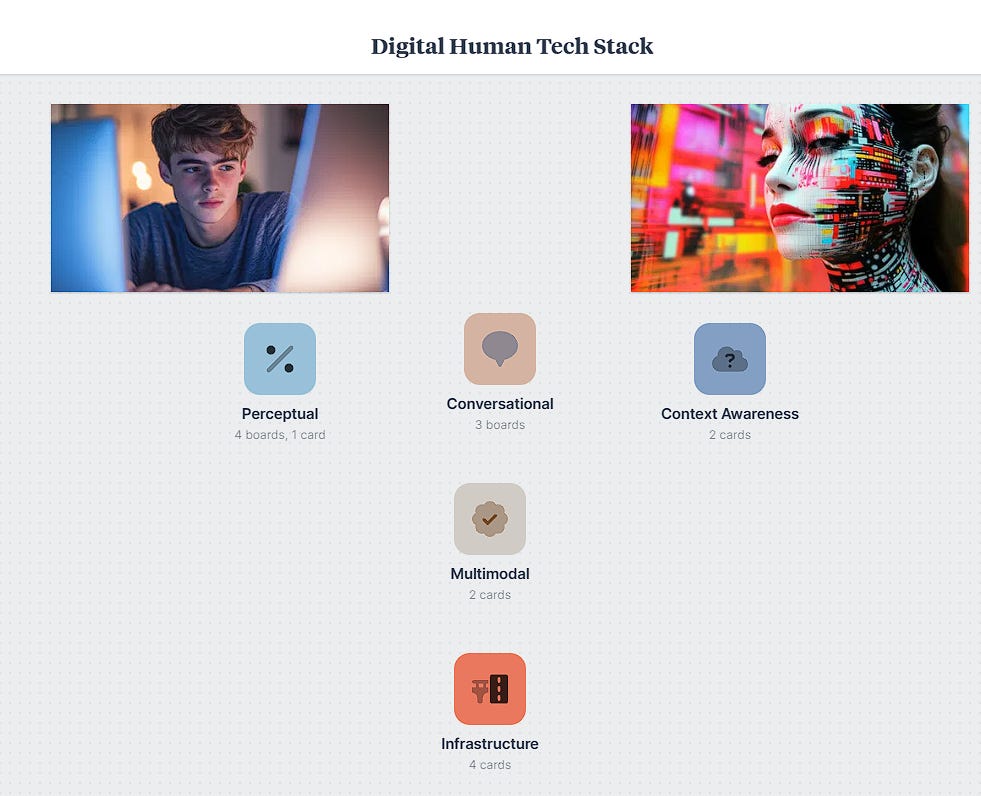

The Digital Human Technology Stack

Real conversations need more than voices

As of March 2025, we have effective audio-oriented conversations with AI. We can interrupt the audio overviews from NotebookLM with our own questions. There’s Grok’s range of conversational partners, some with rather unhinged personalities. Possibilities are also emerging from research labs like Sesame. Its female voice sounds remarkably similar to the one in NotebookLM, but its male voice is distinctive.

The major AI tools have broad capabilities for implementing chatbots through APIs. ElevenLabs has nearly perfected the product delivery of voice-oriented AI tools.

Voice interactions with our devices are becoming normalized, but it’s like talking on the phone or participating in interactive radio. Over the last year, we’ve been hearing a lot of talk about the imminent rise of AGI that surpasses human capabilities in every domain. But is the AGI revolution faceless?

Over the past six months, we’ve started hearing more talk about humanoid robots from Tesla, Nvidia, and a range of venture-backed startups. Most of the talk is marketing spin telling us that every home will have a humanoid assistant to do the chores. Why do those devices need to be humanoid? They don’t. We already have machines automating many of the chores. But these companies have to sell us something that’s really expensive, nearly the price of a car. I have no doubt that, over the next decade, humanoid robots will be technically feasible. The big barrier to these robots will be regulatory, not technical. While AI is seemingly getting a pass on regulation for the next few years, robots are tangible products. A rogue robot suddenly physically harming young children is no one’s idea of an acceptable risk.

Meanwhile, developments continue in 3D graphics. Let’s talk about the digital human technology stack. I’m not talking about robotics but extended reality and virtual reality. It remains to be seen whether there will be a platform shift away from smartphones, desktops, and laptops and toward broad adoption of headsets and advanced eyeglasses with augmented reality capabilities. I think there’s a high likelihood that extended reality becomes the dominant paradigm within 5 years. Even if it does not, realistic avatars will certainly be a part of many people’s lives, even if it’s only for gaming, media, and entertainment.

What’s the essential technology stack required to create lifelike, interactive digital humans with conversational AI capabilities?

For the open-world interactive stories in VR that we want to develop, we need a structured framework that identifies the core components and helps us think through the implementation challenges and the innovations needed to bring these experiences to reality.

Start from the user perception and traverse down to the backend infrastructure.

The Perceptual layer is where everything leads. We know its failure mode as the uncanny valley. If we don’t perceive digital humans as looking and acting with natural facial expressions, realistic body movements, and clear speech, then the illusion doesn’t exist. (Let’s set aside, for a moment, that there will be many scenarios where we don’t want characters that are truly human-looking. Maybe the story calls for cartoon-like people or even animals.)

The Conversational layer brings us believable dialogue. Here is where a lot of progress has been made in the last year. We have the AI-enabled tools to understand what we say. We are increasingly getting tools that respond naturally with realistic speech and a bit of emotion. The ability of AI to adapt conversations by recognizing and expressing empathy is a matter of fine-tuning that will rapidly advance.

The Context Awareness layer remembers previous conversations, recognizes our preferences, and understands our virtual environment to respond naturally over time.

The Multimodal layer is about integration: combining speech, facial expressions, gestures, and emotional cues into unified, context-aware interactions.

The Infrastructure layer supports all these interactions. It provides the cloud and edge computing resources, ensures privacy and ethical handling of user data, and continuously optimizes digital human interactions through performance monitoring and adaptive learning.
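To make the framework easier to reason about, the five layers can be written down as a simple ordered structure, from user perception down to backend infrastructure. This is only an illustrative sketch: the `Layer` class, the `DIGITAL_HUMAN_STACK` tuple, and the `layers_below` helper are hypothetical names introduced here, and the responsibilities are paraphrased from the descriptions of each layer rather than taken from any existing library.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    """One layer of the stack (hypothetical type, for illustration only)."""
    name: str
    responsibilities: tuple[str, ...]


# Ordered from what the user perceives down to the backend.
DIGITAL_HUMAN_STACK = (
    Layer("Perceptual", (
        "natural facial expressions",
        "realistic body movements",
        "clear speech",
    )),
    Layer("Conversational", (
        "understanding what we say",
        "natural, realistic spoken responses",
        "recognizing and expressing empathy",
    )),
    Layer("Context Awareness", (
        "memory of previous conversations",
        "user preferences",
        "understanding of the virtual environment",
    )),
    Layer("Multimodal", (
        "integrating speech, facial expressions, gestures, and emotional cues",
    )),
    Layer("Infrastructure", (
        "cloud and edge computing resources",
        "privacy and ethical handling of user data",
        "performance monitoring and adaptive learning",
    )),
)


def layers_below(name: str) -> list[str]:
    """Return the names of the layers beneath the named layer."""
    names = [layer.name for layer in DIGITAL_HUMAN_STACK]
    return names[names.index(name) + 1:]


if __name__ == "__main__":
    # Walk the stack top-down, as the framework suggests.
    for depth, layer in enumerate(DIGITAL_HUMAN_STACK, start=1):
        print(f"{depth}. {layer.name}: {'; '.join(layer.responsibilities)}")
```

Keeping the stack as ordered data makes it straightforward to attach notes, candidate tools, or open problems to each layer later without changing the overall shape of the framework.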

As you think about these technologies, keep the future in mind. A decade is not that far away. I know that’s hard to comprehend for many people, especially if you’re younger than 30. But in 10 to 15 years, you will understand that. The progress of time is why you want to work on problems that may take 5 or 10 years or more.

These key technologies all have interdependencies. The mechanics of specific technical implementations might change, but establishing the concepts helps us document, categorize, and analyze state-of-the-art advancements and future development trajectories.