Night Listener DevLog #1

What happened in January 2026

FYI. While I’ve been exploring the use of AI for writing, I’m committing not to do that on this newsletter going forward. So, if this reads a bit sloppy, it’s because I wrote it without any editorial assistance from AI. Writing is a form of thinking, and this Substack is a place where I’m thinking through issues about generative AI, 3D, simulation, game engines, and mixed reality.

AI can be very valuable in writing, especially for editing your prose. But it’s too easy to lose your own writing voice and adopt the manner in which AI expresses a topic. LLMs often come up with a resounding phrase, which is tempting to insert here and there. I do use LLMs extensively every day for brainstorming, research, and coding. Claude has become my daily driver, followed by Grok, and then Gemini. I’m cancelling my Pro subscription to ChatGPT but staying on the lower paid plan. Let’s get on with this month’s update.

I’m trying to stay focused on my one story. See my last post.

But what is Night Listener, you might ask. You thought I said I was working on Never Fear Tokyo??

Night Listener is a self-contained companion game to Never Fear Tokyo. Limited in scope, Night Listener is a project that I should be able to get done without getting sucked into the messy complexity of a larger environment.

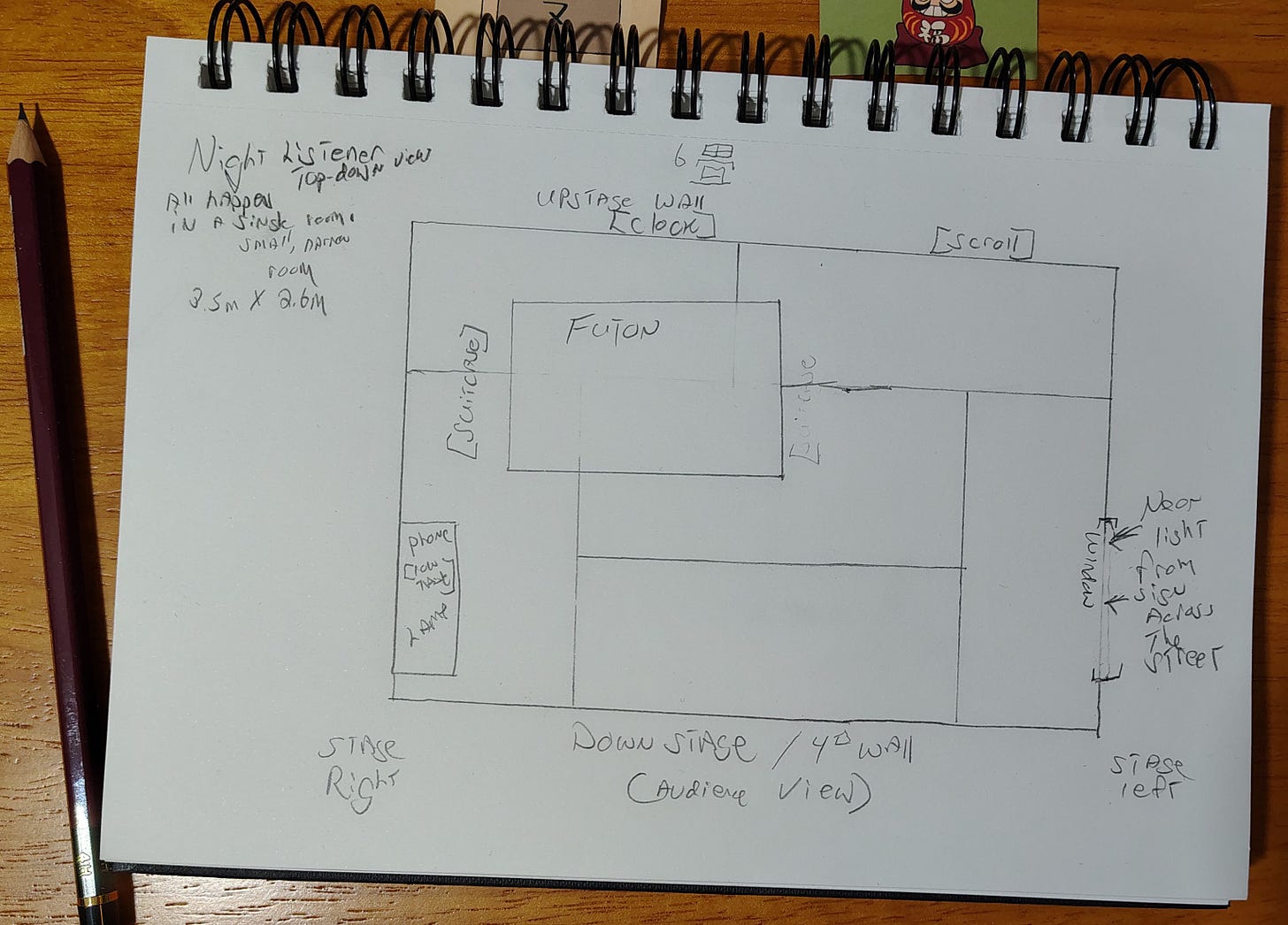

This game takes place in one room over one night with only one character.

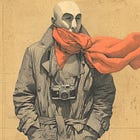

The character is Alex, the same photojournalist protagonist of Never Fear Tokyo, who has arrived to cover the 1964 Olympics. During this rainy night in his rented room, he overhears voices that he initially assumes are his neighbors. As the night progresses, the phrasing and tone of what he hears intensify to the point where he doubts where the voices are coming from, whether from neighbors or the supernatural. It’s a rough night for Alex as he visualizes shapes on the wall. Outside his window is a neon sign, typical of Tokyo; the neon casts color into the room. Throughout the night, the colors change and the sign changes to reflect words he’s hearing. Or, at least that’s what he imagines.

As the player, you have to figure out, along with Alex, what’s happening in that room that night, and what type of shape he (and you) will be in when the sun rises in the morning.

I have a set of game mechanics that I won’t go into at this point. In subsequent devlogs, I’ll label those as spoilers in case you’re interested in experiencing Night Listener.

The mechanics are documented in a game design document that’s a bit over 5k words. I did use ChatGPT and Claude to help brainstorm the game. But most of the key elements were ideas that I came up with on my own. For example, here are a couple of the key concepts I added that the LLMs didn’t come up with for the game:

Night Listener is an auditory experience. The voices, heard only in Japanese, are the key to unlocking what’s happening. Game mechanics help a non-Japanese speaker, which includes Alex, grasp the impact. But even if you know Japanese, the voices arrive in fragments that need assembling. The essence of Night Listener is an audio experience about not understanding and listening without context.

The player, like Alex, is a listener constrained to the specific space of a room during one night.

Obviously, I don’t expect this to be a blockbuster game! The appeal will be limited, but if only a hundred people find this intriguing then I will have accomplished what I set out to do with this small game. The more comprehensive Never Fear Tokyo is designed for a broader audience interested in narrative-driven games.

A defining aspect of both games is that they’re presented as a stage play rather than a full 3D world. That also was my own idea and not one provided by AI. You’ll initially view Night Listener as if you’re sitting in the audience of a theater and watching a play. Take a look at the concept art for this post, and notice how it resembles a stage. Night Listener could work equally well as a play dramatized on stage or as a short story. I’m highly interested in transmedia. But the game provides capabilities not possible through other media. As you engage with Night Listener the game, you’ll realize that you can switch to Alex’s first-person POV, which unlocks capabilities not available from the audience’s third-person POV.

Voice as Pedagogy & Research Testbed

Which of my three “big ideas” does this relate to? Voice as pedagogy. But I’m being very careful that Night Listener is not an educational game for learning Japanese. That would be a bit boring. But it is helping me learn the language.

The expressions and utterances that Alex hears over the night form a cohesive story in their own right, though not told in order. The story is one I wrote nearly 20 years ago while living in Buenos Aires. I’m translating the text, which is mostly dialogue, into Japanese.

For the voices in Japanese, I’ve been experimenting with ElevenLabs, which has cloned voices of native speakers from Japan. There is also other software more fine-tuned for Japanese. But I probably will end up contracting with voice actors. The expressiveness of AI voices is still lacking. And that’s an area I’m tracking with my research.

Night Listener provides a benchmark for measuring acting expressiveness through AI voices. With significant effort, one probably could modify AI voices to the extent that they would be suitable for my purposes. But this is a case where it would be easier and quicker (though not cheaper) to hire voice actors. Based on my experiences and knowledge of generative AI for audio, we should have fully expressive generative voices in the major languages by the end of 2027. That’s a very conservative estimate but note the emphasis on fully expressive. For many purposes, you can do that now. And I will be using Night Listener, even after I finish this game, as an evaluation tool for benchmarking the acting quality of generative audio.

Voice acting is not a good career option for anyone in the mid-21st century. (Is any career a good option going forward?) People who want to pursue voice acting will need to start authoring their own stories and bringing their own characters to life while also figuring out how to monetize their efforts. There will be an audience for that! But voice actors should not expect that their future income will come from publishers and for-profit companies that currently contract voice actors. Yet, there will be opportunities for publishers and studios that state they only use human voice actors; but I expect that segment to be very small since businesses will seek to optimize their expenses.

Working with Unreal Engine

I already mentioned the concept art for Night Listener.

I made the first pass at a gray blockout in UE. Before I settled on the stage-like aspect for Night Listener, I thought about a realistic room for 1964 Japan with tatami mats. Rooms in Japan are sized based on tatami mats. A 6-mat tatami room would be about 10 sqm.
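As a rough check on that 10 sqm figure, here is a minimal sketch of the arithmetic. It assumes a nominal mat of 0.91 m by 1.82 m, which is only one of several regional mat sizes, and it assumes the usual 6-mat footprint of three mat widths by two mat lengths. The function names are hypothetical helpers for illustration, not part of any tool I use. The centimeter output is handy because Unreal's default unit is the centimeter.

```python
# Assumed nominal tatami size in metres. Regional standards differ,
# so treat these values as illustrative, not authoritative.
MAT_WIDTH_M = 0.91
MAT_LENGTH_M = 1.82


def room_area_sqm(mat_count, mat_width=MAT_WIDTH_M, mat_length=MAT_LENGTH_M):
    """Floor area of a room covered by `mat_count` full mats."""
    return mat_count * mat_width * mat_length


def six_mat_footprint_cm(mat_width=MAT_WIDTH_M, mat_length=MAT_LENGTH_M):
    """(short side, long side) of a 6-mat room in centimetres.

    Assumes the room is three mat widths by two mat lengths.
    """
    short_side = round(3 * mat_width * 100)
    long_side = round(2 * mat_length * 100)
    return short_side, long_side


if __name__ == "__main__":
    print(f"6-mat area: {room_area_sqm(6):.2f} sqm")
    print("Blockout footprint (cm):", six_mat_footprint_cm())
```

With a smaller or larger regional mat size, the same room lands somewhat under or over 10 sqm, so "about 10 sqm" is a fair working number for a blockout.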

The sketching was a useful exercise, especially for thinking about how the character moves within a constrained space, but a game environment doesn’t need to be realistic. It needs to be plausible.

I’m developing in Unreal Engine 5.7. I’ve been working with UE for more than 5 years, and I still learn something new every time. I’ve been diving into Substrate, which is UE’s latest approach to materials.

If you’re on X, you might have seen short clips of people creating games with world models, or people creating games for themselves with tools like Claude. Will we continue to need game engines like Unreal Engine? I think so, and I’m writing an essay to explore that topic. Eventually, there will be an integration of world models and game engines.

Language learning

Some might wonder if there’s a need to learn a language if AI can translate for us via earbuds or smart glasses. While that tech will be useful, many people will still embrace learning a language in order to fully integrate into a community of native speakers wherever they are. I’ve used Google Translate quite effectively in Vietnam while conversing with Vietnamese who don’t speak English. But, after a while, you run into limitations, especially with the difficulty of ‘small talk’, the casual banter that forms a friendship. Developing a deep relationship through a translation app is impossible. Besides, language learning has tremendous cognitive benefits.

There are a massive number of YouTube channels dedicated to language learning. Combined, those channels have an audience in the millions. AI translation has its place but people will still continue learning languages. I do question whether teaching a foreign language in a classroom setting has a role in future years. When language teachers start using words like preterite and imperfect subjunctive, they’re already on the path to failing as a teacher. Instead, teach grammar as patterns to express what you’re trying to say. No one is standing on a street corner attempting to talk to someone and thinking, “Oh, in this moment, I need to use an intransitive verb.” Students struggle enough to learn the language; they don’t need to be confused with academic grammatical terminology.

AI offers some really great methods for language learning in a personalized context.

Learned some Japanese. As for my own progress in Japanese, I’ve started to recognize some words when watching a Japanese-language show. I’m understanding the structure of the language and sentence patterns. I feel that I’m on the path and have built confidence that I can learn Japanese.

Isn’t learning a language with a different character set challenging? Yes. Russian was the first foreign language I learned. (That’s an unusual choice for an American who knew nothing other than English; my high school offered Spanish but my speech impediment made me very insecure about taking an elective Spanish class though I wanted to do so.) My college required a third-year foreign language course for the bachelor’s degree.

I expected at the time to go into a career in international relations, which I wish I had done, but I lost focus and got sidetracked. It was the mid-1980s and the Cold War was still in full bloom. On the very first day of class, my Russian professor introduced Cyrillic. That was our focus for the first week, and it only took a week to learn Cyrillic. (I ended up taking 8 courses in Russian before graduating.)

So, if you want to learn Japanese, start with learning Hiragana and Katakana. That’s 92 characters with some additional variations on those characters for pronouncing certain sounds. Some sounds in the scripts overlap to produce 71 standard sounds in the Japanese language. (It’s still a lot easier than English despite Japanese having more sounds to learn.) What about the thousands of Kanji? Focus first on Hiragana and Katakana, especially Hiragana. Phonetically, everything in Japanese can be written in Hiragana, though that’s not practical for everyday reading and writing. That is where the concept-based Kanji come into use.

I found these letter blocks on Amazon. They’re really helpful for assembling words in a tactile manner for learning the character sets. One side of the block has Hiragana, another side Katakana, another side has a Kanji character, etc. (The photo below only shows Kanji.)

I’m immersing myself as much as possible in hearing Japanese; Netflix has a lot of Japanese shows. Japanese reality dating shows are very good, much better than their counterparts in other countries. The Japanese express a kindness and courtesy in their dating shows that are absent from American dating shows. In American dating shows, there are always these buff guys (talking loudly) looking for the chance to strip off their shirt at every moment. That doesn’t happen on Japanese dating shows. I highly recommend Offline Love, which is set in Nice, France. The participants travel from Japan to France. The concept of the show is that they must lock away their digital devices and rely only on handwritten letters and communicating in person. It’s a throwback to life before the 1990s when you couldn’t tell someone you would be late for lunch because there was no texting or calling on mobile phones.

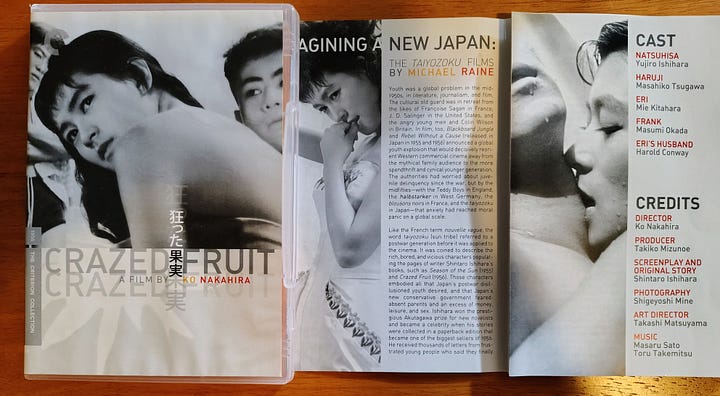

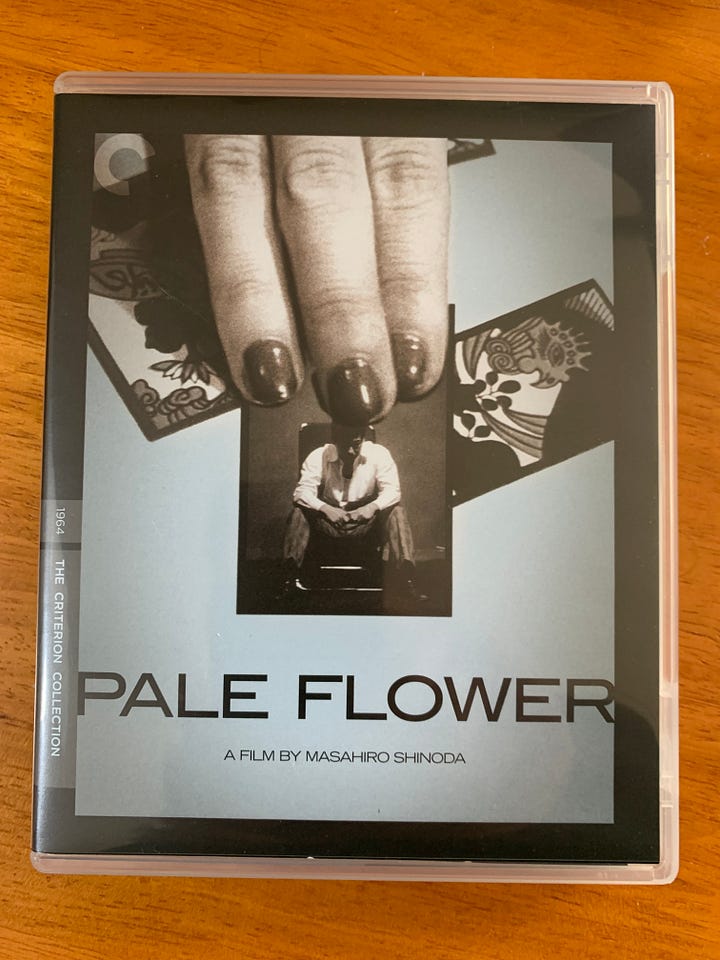

About 5 other Japanese dating shows consumed my viewing time in January. I also found time for two movies: Pale Flower and Crazed Fruit. These are directly related to my theme of 1960s Tokyo.

I strongly recommend Pale Flower. That’s a great movie.

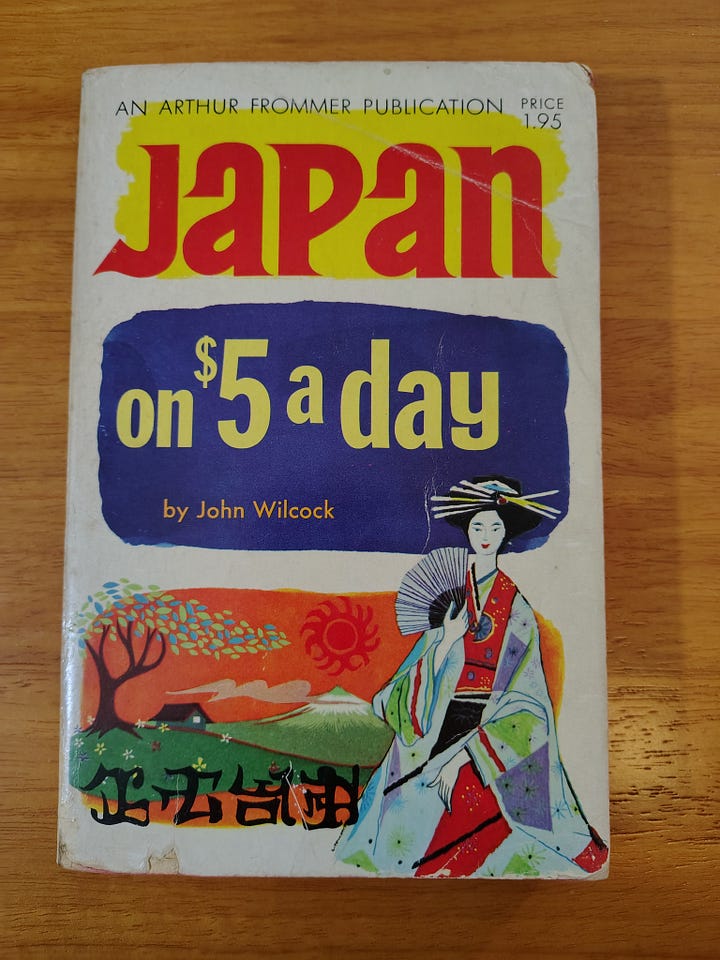

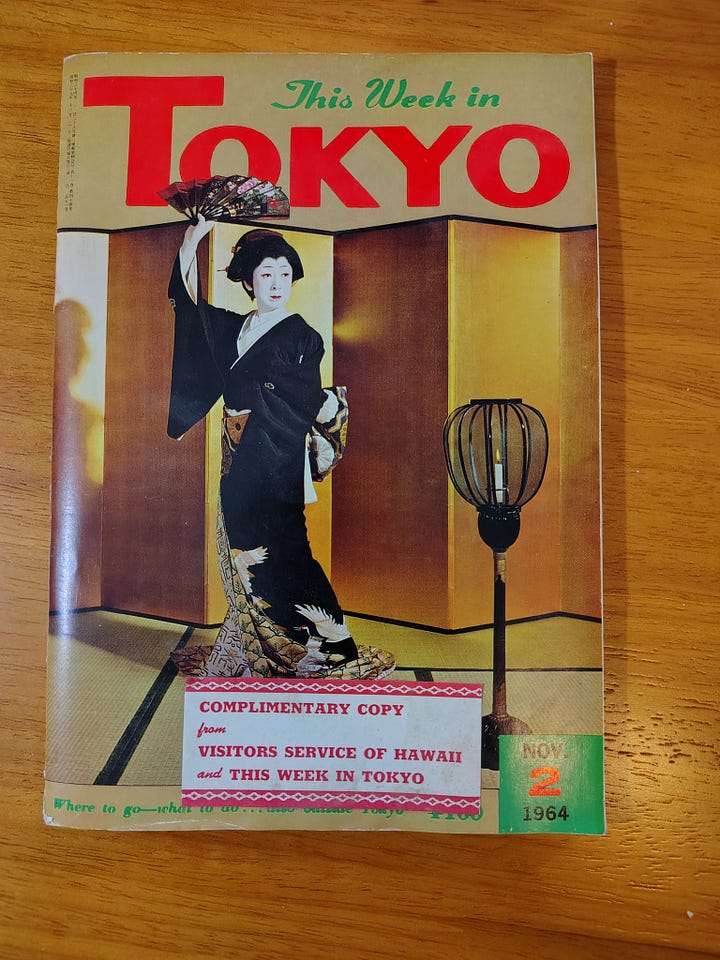

I also found two books on eBay that will help tremendously with developing Never Fear Tokyo.

Japan on $5 a Day (1964 edition) and This Week in Tokyo, which covers the week of November 2, 1964. The latter is a remarkable find! Never Fear Tokyo is set during the 1964 Tokyo Olympics, which happened in October. I think I’ll shift the storyline to be the week just after the Olympics ended, which is the week of November 2, now that I have a detailed magazine on events and places during that week in 1964.

Big picture time

I’ve restructured my life to explore the new genre of storytelling that is filmic and interactive. Note the emphasis on ‘new genre’. This is not a replacement for movies, though many movies will use generative AI in the process just as movies have used special effects for a long time now. This genre is a hybrid between movies and games. Generative AI provides capabilities not possible in either medium. Extend that experience into virtual reality and you’ll arrive in the metaverse. This metaverse concept, which is still relevant, will not be broadly adopted for another decade. The tech still needs to mature, and we’ll need to wait for better devices than Meta’s Quest to come to market.

Will that ever happen? Yes. While the market evolves, major developments are happening in research labs across the world. Technical reports and research papers are constantly being published that describe new techniques in generative AI for scene development and 3D. We’re also seeing a lot of venture capital flowing into world models, which offer an interactive experience similar to games (though with a lot more limitations).

One of the things pushing off developments in VR hardware is an emphasis in the market on robotics. Honestly, I’m not ready for humanoid robots. But I would appreciate a robotic lawnmower or a device to sweep the cobwebs from the corners of my ceiling every so often. We’ve had robotic vacuum cleaners for a while now, but I would drop some serious cash for a robot that can deep clean my house and fix any maintenance problems that come up.

From a research perspective, I’ve chosen to ignore robotics. I can’t go down every rabbit hole. But I will go deep into world models, which are used to train robots. Of course, I’m more interested in the entertainment aspect of world models.

Among the questions I’m examining in February: what does interactive video, developed via world models, mean for game development?